Overview

Any successful open source software project these days requires some form of Continuous Integration (CI), and the InstructLab CLI project is no different. We run many types of CI jobs today, including but not limited to:

- Python/Shell/Markdown Linting

- Unit, Functional, and End-to-End (E2E) Testing

- Package Building and Publishing

In this blog post, we are going to focus specifically on E2E testing being done today for InstructLab – why we have it, what it covers, how it works, and what is next.

Why even have E2E testing?

The InstructLab CLI has several complex, compute-intensive operations – generating synthetic data, model training, model evaluation, etc. To ensure functionality for our users while also enabling the rapid pace of development typical of the AI community, automated E2E testing was a necessity.

The current E2E testing runs in two forms:

- Smaller-scale jobs against GitHub Pull Requests and Merges in our open source CLI repository that run lower-intensity workflows with smaller hardware footprints in shorter timeframes

- Larger-scale nightly jobs that run more compute-intensive workflows in longer timeframes using larger hardware footprints

The first form only triggers once all linting checks on the Pull Request have passed, which saves compute hours.

The second form runs automatically every night on a cron schedule, but Project Maintainers can also trigger it manually against a Pull Request.
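As a rough illustration of the gating described above, the sketch below models the trigger decision in Python. The event names mirror GitHub Actions triggers (pull_request, push, schedule, workflow_dispatch), but the jobs_to_run function, its parameters, and the "small"/"large" tier labels are hypothetical. The real gating lives in our GitHub Actions workflow files, not in a Python script.

```python
# Hypothetical sketch: decide which E2E job tiers a CI event should trigger.
# Event names follow GitHub Actions triggers; everything else is illustrative.


def jobs_to_run(event: str, lint_passed: bool, maintainer_requested: bool = False) -> set[str]:
    """Return the E2E job tiers to launch for a given CI event."""
    jobs: set[str] = set()
    if event in ("pull_request", "push"):
        # Small-scale jobs run on PRs and merges, but only after linting passes,
        # so no GPU hours are spent on changes that would fail lint anyway.
        if lint_passed:
            jobs.add("small")
    elif event == "schedule":
        # Large-scale jobs run nightly on a cron schedule.
        jobs.add("large")
    elif event == "workflow_dispatch" and maintainer_requested:
        # Maintainers can also trigger the large-scale jobs by hand against a PR.
        jobs.add("large")
    return jobs


print(jobs_to_run("pull_request", lint_passed=False))  # set()
print(jobs_to_run("pull_request", lint_passed=True))   # {'small'}
print(jobs_to_run("schedule", lint_passed=True))       # {'large'}
```

Gating the cheap tier on lint means a typo never costs a GPU instance, while the expensive tier stays on a fixed nightly cadence unless a maintainer explicitly asks for it.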

E2E Hardware Testing Today

Right now we are testing the latest bits for InstructLab against 3 types of NVIDIA GPU configurations. Why just NVIDIA? While InstructLab does offer hardware acceleration for platforms such as AMD and Intel, we have faced the same challenge as many others in the larger AI community: securing consistent hardware on which to run regular CI jobs.

Given these constraints, our team has focused mainly on building the foundation of our E2E CI infrastructure on NVIDIA, since we can take advantage of the on-demand cloud instances offered by Amazon Web Services (AWS). Previously, we used GitHub-hosted GPU-enabled runners for these jobs, but found they lacked the options, flexibility, and cost-effectiveness our organization ultimately needed.

Below is a simplified table from our CI documentation giving an overview of our current coverage.

| Name | Runner Host | Instance Type | OS | GPU Type |
|---|---|---|---|---|
| e2e-nvidia-t4-x1.yml | AWS | g4dn.2xlarge | CentOS Stream 9 | 1 x NVIDIA Tesla T4 w/ 16 GB VRAM |
| e2e-nvidia-l4-x1.yml | AWS | g6.8xlarge | CentOS Stream 9 | 1 x NVIDIA L4 w/ 24 GB VRAM |
| e2e-nvidia-l40s-x4.yml | AWS | g6e.12xlarge | CentOS Stream 9 | 4 x NVIDIA L40S w/ 48 GB VRAM each (192 GB total) |
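To make the arithmetic in the last row explicit, here is the same coverage expressed as Python data. Total VRAM is simply GPU count times per-GPU memory (4 x 48 GB = 192 GB). The E2ERunner class and its field names are hypothetical, chosen only for illustration; they are not part of the InstructLab CI code.

```python
# Illustrative encoding of the E2E coverage table; the class and field
# names are hypothetical, not taken from the InstructLab CI code.
from dataclasses import dataclass


@dataclass(frozen=True)
class E2ERunner:
    workflow: str        # GitHub Actions workflow file
    instance_type: str   # AWS EC2 instance type
    gpu_model: str
    gpu_count: int
    vram_per_gpu_gb: int

    @property
    def total_vram_gb(self) -> int:
        # Aggregate VRAM is GPU count times per-GPU memory.
        return self.gpu_count * self.vram_per_gpu_gb


RUNNERS = [
    E2ERunner("e2e-nvidia-t4-x1.yml", "g4dn.2xlarge", "Tesla T4", 1, 16),
    E2ERunner("e2e-nvidia-l4-x1.yml", "g6.8xlarge", "L4", 1, 24),
    E2ERunner("e2e-nvidia-l40s-x4.yml", "g6e.12xlarge", "L40S", 4, 48),
]

for r in RUNNERS:
    print(f"{r.workflow}: {r.gpu_count} x {r.gpu_model} = {r.total_vram_gb} GB VRAM")
```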

The E2E Workflow

Our CI infrastructure, orchestrated through GitHub Actions, runs this testing via the following steps:

- Initialize an EC2 instance with access to one or more NVIDIA GPUs

- Install InstructLab with CUDA hardware acceleration and vLLM, if applicable

- Run through a typical InstructLab workflow orchestrated via our e2e-ci.sh Shell script

- Capture the test results and tear down the EC2 instance

- Report the test results to one or both of the following places:

  - As a comment or CI status check within a Pull Request

  - Our upstream Slack channel, #e2e-ci-results
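The key property of these steps is that the EC2 instance is torn down no matter how the run ends, before results are reported. The sketch below captures that lifecycle in Python with try/finally. Each step is passed in as a plain callable, and the run_e2e_job and E2EResult names are hypothetical stand-ins for what are really GitHub Actions steps and our e2e-ci.sh script.

```python
# Hypothetical sketch of the E2E job lifecycle. Each callable stands in for
# a GitHub Actions step; none of these names are real InstructLab APIs.
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class E2EResult:
    instance_id: str
    passed: bool = False
    log: list[str] = field(default_factory=list)


def run_e2e_job(
    launch: Callable[[], str],
    install: Callable[[str], None],
    run_tests: Callable[[str], bool],
    teardown: Callable[[str], None],
    reporters: list[Callable[[E2EResult], None]],
) -> E2EResult:
    # 1. Initialize a GPU-backed instance.
    instance_id = launch()
    result = E2EResult(instance_id)
    try:
        # 2. Install InstructLab, then 3. run the workflow script.
        install(instance_id)
        result.passed = run_tests(instance_id)
    except Exception as exc:
        # A crash during install or testing counts as a failed run.
        result.log.append(f"error: {exc}")
    finally:
        # 4. Always tear down, even on failure, so no GPU instance is left running.
        teardown(instance_id)
        result.log.append(f"terminated {instance_id}")
    # 5. Fan the results out to each destination (e.g. PR status, Slack).
    for report in reporters:
        report(result)
    return result


# Example with stand-in steps where the test stage crashes.
terminated: list[str] = []
reports: list[bool] = []


def failing_tests(instance_id: str) -> bool:
    raise RuntimeError("training step failed")


result = run_e2e_job(
    launch=lambda: "i-demo",
    install=lambda iid: None,
    run_tests=failing_tests,
    teardown=terminated.append,
    reporters=[lambda r: reports.append(r.passed)],
)
print(result.passed, terminated, reports)  # False ['i-demo'] [False]
```

Putting teardown in the finally clause matters more than usual here: a leaked multi-GPU instance keeps billing long after the job that launched it has failed.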

Slack reporting

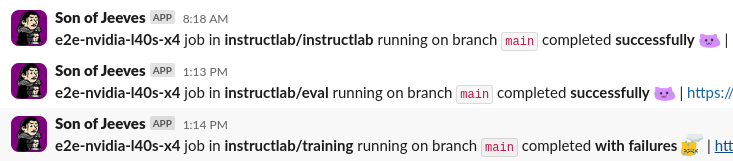

We send results from our nightly E2E CI jobs to the InstructLab Slack workspace channel #e2e-ci-results via the Son of Jeeves bot. This has been implemented via the official Slack GitHub Action.
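The message itself is just a small JSON payload. The sketch below builds one in the shape accepted by Slack's chat.postMessage Web API method (a channel plus message text). The build_slack_payload function, the summary wording, and the example run URL are hypothetical. In our CI the message is sent by the official Slack GitHub Action, so this only illustrates the kind of data involved.

```python
# Hypothetical sketch: summarize nightly E2E results as a Slack message.
# Uses the "channel" and "text" fields of Slack's chat.postMessage method.
import json


def build_slack_payload(channel: str, results: dict[str, bool], run_url: str) -> str:
    """Return a JSON payload summarizing pass/fail per E2E workflow."""
    lines = []
    for workflow, passed in sorted(results.items()):
        lines.append(f"{workflow}: {'PASSED' if passed else 'FAILED'}")
    failures = sum(1 for passed in results.values() if not passed)
    if failures == 0:
        header = "Nightly E2E: all jobs passed"
    else:
        header = f"Nightly E2E: {failures} job(s) failed"
    text = "\n".join([header, *lines, f"Details: {run_url}"])
    return json.dumps({"channel": channel, "text": text})


payload = build_slack_payload(
    "#e2e-ci-results",
    {"e2e-nvidia-l40s-x4.yml": False, "e2e-nvidia-l4-x1.yml": True},
    "https://example.com/runs/123",  # placeholder URL for illustration
)
print(json.loads(payload)["text"].splitlines()[0])  # Nightly E2E: 1 job(s) failed
```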

Future plans

We have a lot planned for the InstructLab CI ecosystem, from more in-depth testing, to a greater hardware support matrix, to more sophisticated workflow systems. For more details on any of these topics, please see our upstream CI documentation, check out our CI/CD issues, and come join us in the InstructLab community!

Attributions

While I have penned this blog post, many people have contributed to the InstructLab CI. I want to call attention to the following folks, who have been invaluable to this work:

- Ali Maredia, Senior Software Engineer, Red Hat

- BJ Hargrave, Senior Technical Staff Member, IBM

- Dan McPherson, Distinguished Engineer, Red Hat

- Russell Bryant, Distinguished Engineer, Red Hat

Nathan Weinberg is a Senior Software Engineer at Red Hat.